When you install the Azure Stack Development Kit (ASDK), it runs as a completely isolated environment with multiple internal networks. This means that your Azure Stack services (such as ADFS, the portal and AD) are not reachable from outside the box at all. But what if you want to use the ASDK from multiple computers, or, as in our case, use it in conjunction with other Active Directories for login information? Then you need to remove the NAT from the installation and make sure you have a fully routable connection.

In this post, we will switch the network mode so that all other computers in your network can connect directly to the Azure Stack services, and can therefore use the portal and other services from various machines.

[update 7/9/2019] As always, things change, and so does the Azure Stack Development Kit. In later versions of the product, the AzS-BGPNAT VM no longer exists; the NAT rules and routing are handled by the host itself. Luckily, the way the product performs NAT and the underlying technology did not change; only the BGPNAT VM was removed. So this topic needs only a slight update: instead of issuing the commands on the VM, run the Get-NetNat | Remove-NetNat and New-NetIPAddress commands from a PowerShell window on the host itself, and it will still work. When you update the routing tables, point the gateway for the 192.168.200.0/24 address space to the IP address of the ASDK host.

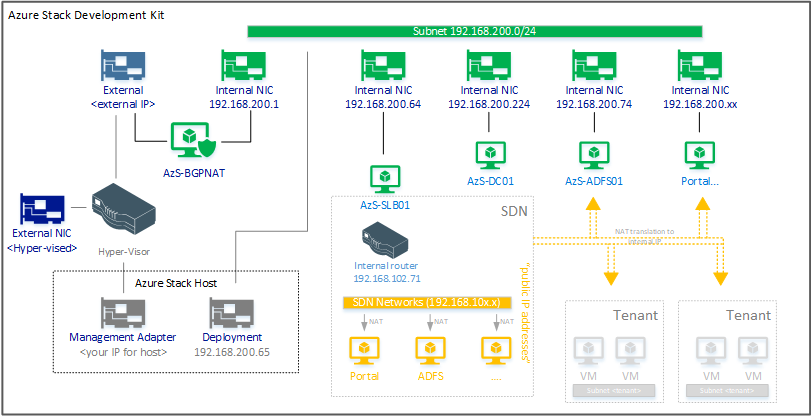

Let’s look at the architecture first:

[update 7/9/2019] The AzS-BGPNAT VM does not exist anymore; NAT and BGP are handled by the host.

The Azure Stack host uses a single network adapter. This physical adapter is bound to a Hyper-V virtual switch, turning it into a Hyper-V network, and a virtualized copy of the onboard adapter is given back to the host OS as the management adapter.

Let’s call this the external network. Your Azure Stack host is connected to it, as is the AzS-BGPNAT VM with one of its interfaces. This VM controls the traffic between the external network and the internal VMs. It has two network interfaces; the second one is connected to the 192.168.200.0/24 network, the core services network for Azure Stack, which hosts services such as Active Directory. The 192.168.200.0/24 network is also linked back to the host itself: a deployment network adapter is created on the host, so the host can talk directly to all the VMs in the 192.168.200.0/24 network. This adapter is used for deployment and management; whenever you perform remote administrative tasks directly on the VMs (or their services), the host uses that particular adapter.

When you open the portal on the host, all traffic goes via that deployment adapter, as we will see later.

The VMs (in the 192.168.200.0/24 range), however, use the AzS-BGPNAT VM for all outbound communication, and the BGPNAT machine performs the NAT translation for that traffic.

But there is more. While the core services are on the 192.168.200.0/24 network, the portal, the ADFS website and many other services are also published on another virtualized network: the inner core of the Azure Stack services. When you ping the portal from the host, you will notice that its IP address is in the 192.168.102.0/24 range. This subnet sits behind the AzS-SLB01 VM.

In fact, the documentation states that the following ranges cannot be used outside of the Azure Stack box, so I’m assuming these are the networks used internally behind the BGPNAT topology managed by the host:

- 192.168.200.0/24

- 192.168.100.0/27

- 192.168.101.0/26

- 192.168.102.0/24

- 192.168.103.0/25

- 192.168.104.0/25

So, in theory, connecting to ADFS works like this:

- The ADFS server has IP 192.168.200.74

- The DNS record for ADFS points to 192.168.102.5

- Your external client tries to connect to 192.168.102.5

- The 192.168.102.5 address is advertised to the BGPNAT as being behind the SLB (192.168.200.64)

- Your client goes from: External -> BGPNAT -> SLB

- The SLB then NATs the traffic to the endpoint: SLB -> ADFS (192.168.200.74)

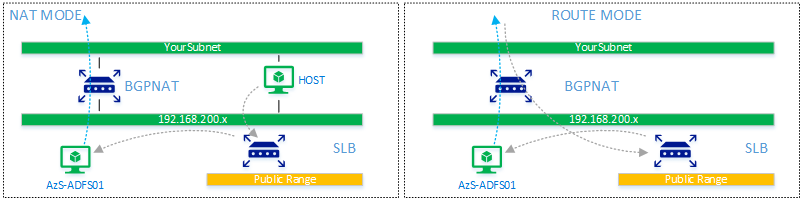

Or, if you were to boil it down to a (very basic) infographic, it looks like this:

Your request goes via the BGPNAT, which by default performs NAT only for outbound connections and accepts no inbound connections (this is what we want to change). The public IP address of the service (ADFS) is published into the BGPNAT as being located on the SLB. The SLB then does its magic and NATs the traffic towards the 192.168.200.74 address.
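To make the address flow above a bit more concrete, here is a minimal Python sketch (standard library ipaddress module only) that maps each address from the ADFS example to the ASDK-internal range it falls in. The range list is copied from the documentation excerpt above; the helper name internal_range_for is hypothetical. It shows that the address the client actually connects to (the 192.168.102.5 VIP) lives in a different internal range than the SLB and the ADFS server (192.168.200.x), which is why an outside client needs a route to the VIP range and not only to the core services network.

```python
import ipaddress

# Ranges that, per the ASDK documentation quoted above, are used inside the box.
ASDK_INTERNAL_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in (
        "192.168.200.0/24",
        "192.168.100.0/27",
        "192.168.101.0/26",
        "192.168.102.0/24",
        "192.168.103.0/25",
        "192.168.104.0/25",
    )
]


def internal_range_for(address):
    """Return the ASDK-internal network that contains address, or None."""
    ip = ipaddress.ip_address(address)
    for network in ASDK_INTERNAL_RANGES:
        if ip in network:
            return network
    return None


# Addresses from the ADFS example in this post.
hops = [
    ("ADFS VIP (DNS record)", "192.168.102.5"),
    ("SLB", "192.168.200.64"),
    ("ADFS server", "192.168.200.74"),
]

for name, address in hops:
    print(f"{name:<22} {address:<15} -> {internal_range_for(address)}")
```

An address outside all six ranges, such as the example host IP 172.16.5.26 used later in this post, returns None.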

Back to the deployment network:

Remember that the host has a deployment adapter in the 192.168.200.x range; this lets the host bypass the NAT translation that the BGPNAT performs by default. The BGPNAT does not make the internal services on the public address range (192.168.10x.x) available to the outside, so the Azure Stack Development Kit cannot be reached directly from outside the box. You can install a VPN on the host, which essentially gives you direct (routed) access to the 192.168.200.x network through the host, and thus also to the published 192.168.10x.x addresses. In our case, however, we don’t want to use a VPN; we want to downgrade the BGPNAT functionality to a BGP router only, which acts as the gateway and basic router for the 192.168.200.x and 192.168.10x.x address spaces.

Removing NAT

Now that we know how the network works, we can switch it to routing mode. Routing mode essentially removes the NAT from the BGPNAT machine and allows direct inbound connections.

Make sure that your network does not use any of the ranges listed above, as we will route them directly into the Azure Stack Development Kit box.
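Before switching to routing mode, it can help to check your existing subnets against these ranges. The Python sketch below (standard library ipaddress module only) flags every overlap; conflicting_ranges is a hypothetical helper name, and the subnets in the example are placeholders for your own.

```python
import ipaddress

# ASDK-internal ranges listed earlier in this post.
ASDK_INTERNAL_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in (
        "192.168.200.0/24",
        "192.168.100.0/27",
        "192.168.101.0/26",
        "192.168.102.0/24",
        "192.168.103.0/25",
        "192.168.104.0/25",
    )
]


def conflicting_ranges(local_cidrs):
    """Return (local subnet, ASDK range) pairs that overlap."""
    conflicts = []
    for cidr in local_cidrs:
        # strict=False accepts entries such as "192.168.100.10/24" with host bits set.
        local = ipaddress.ip_network(cidr, strict=False)
        for internal in ASDK_INTERNAL_RANGES:
            if local.overlaps(internal):
                conflicts.append((str(local), str(internal)))
    return conflicts


# Hypothetical example subnets: only the second one collides with the ASDK.
print(conflicting_ranges(["172.16.5.0/24"]))
print(conflicting_ranges(["192.168.100.0/24"]))
```

An empty list means the subnets can be routed alongside the ASDK; a broad supernet such as 192.168.0.0/16 overlaps all six ranges.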

[update 7/9/2019] Updated to reflect the removal of the BGPNAT VM; the NAT is now removed on the host only. Note that you must have a console connection to the host, as you will lose your DHCP/IP address.

Disclaimer: I am not sure this is the appropriate way of doing this, and obviously no support can be given. If you are looking at deploying this in a non-hobby network, ask your Microsoft representative.

- Log in to the console of the Azure Stack Development Kit server

- If you are using static IPs:

  - Run ipconfig and write down the external IP address, subnet mask, gateway and interface name

- In a PowerShell window, run: Get-NetNat | Remove-NetNat

- If using DHCP:

  - ipconfig /renew

- If using a static IP:

  - New-NetIPAddress -IPAddress '<your external IP>' -AddressFamily IPv4 -Type Unicast -PrefixLength <your subnet as a prefix length, e.g. 24> -InterfaceAlias '<interfaceName>' -DefaultGateway <your gateway>
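ipconfig reports the subnet as a dotted mask (for example 255.255.255.0), while New-NetIPAddress expects -PrefixLength as a number. Below is a minimal Python sketch, assuming you simply want to build the command text from the values you wrote down. The helper names, the gateway 172.16.5.1 and the interface name 'Ethernet' are hypothetical placeholders; 172.16.5.26 is the example host IP used later in this post.

```python
import ipaddress


def mask_to_prefix(mask):
    """Convert a dotted subnet mask (as shown by ipconfig) to a prefix length."""
    # ip_network accepts "address/netmask"; a non-contiguous mask such as
    # 255.0.255.0 raises ValueError.
    return ipaddress.ip_network(f"0.0.0.0/{mask}").prefixlen


def new_netipaddress_command(ip, mask, gateway, interface_alias):
    """Build the New-NetIPAddress command from the values noted before removing NAT."""
    ipaddress.ip_address(ip)       # validate the host IP
    ipaddress.ip_address(gateway)  # validate the gateway IP
    return (
        f"New-NetIPAddress -IPAddress '{ip}' -AddressFamily IPv4 -Type Unicast "
        f"-PrefixLength {mask_to_prefix(mask)} -InterfaceAlias '{interface_alias}' "
        f"-DefaultGateway {gateway}"
    )


# Hypothetical values: replace them with the ones you wrote down from ipconfig.
print(new_netipaddress_command("172.16.5.26", "255.255.255.0", "172.16.5.1", "Ethernet"))
```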

At this point, we have set the IP address of the host, which now acts as the router, and switched it to routing mode.

This completes the routing part. Now we have to perform some additional steps so that your clients (outside of the Azure Stack box) can route to the network.

On your switches or other network devices, add static routes via the ASDK host so that you can route to 192.168.200.0/24 and 192.168.102.0/24.

If you don’t have a Layer 3 switch or configurable router in your network, you can adjust the routing table on your Windows client by running the following in an administrative command prompt:

- route add 192.168.200.0 mask 255.255.255.0 172.16.5.26

- route add 192.168.100.0 mask 255.255.255.224 172.16.5.26

- route add 192.168.101.0 mask 255.255.255.192 172.16.5.26

- route add 192.168.102.0 mask 255.255.255.0 172.16.5.26

- route add 192.168.103.0 mask 255.255.255.128 172.16.5.26

- route add 192.168.104.0 mask 255.255.255.128 172.16.5.26

(Replace 172.16.5.26 with the IP address you set in the steps above, i.e. the external IP of the ASDK host.)
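Each route add line above is simply one internal range written as network address plus dotted netmask, followed by the ASDK host's external IP as the gateway. Here is a small Python sketch that generates them, assuming you only want the command text to paste into an administrative prompt; route_add_commands is a hypothetical helper.

```python
import ipaddress

# ASDK-internal ranges from the list earlier in this post.
ASDK_INTERNAL_RANGES = (
    "192.168.200.0/24",
    "192.168.100.0/27",
    "192.168.101.0/26",
    "192.168.102.0/24",
    "192.168.103.0/25",
    "192.168.104.0/25",
)


def route_add_commands(gateway, cidrs=ASDK_INTERNAL_RANGES):
    """Return one Windows 'route add' command per range, via the ASDK host's external IP."""
    ipaddress.ip_address(gateway)  # raises ValueError if the gateway is not a valid IP
    commands = []
    for cidr in cidrs:
        network = ipaddress.ip_network(cidr)
        commands.append(f"route add {network.network_address} mask {network.netmask} {gateway}")
    return commands


# 172.16.5.26 is the example ASDK host IP used in this post.
for command in route_add_commands("172.16.5.26"):
    print(command)
```

The same network/netmask pairs can be entered as static routes on your router.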

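NOTE: Since your ASDK is now in fully routed mode, it will lose its internet connection unless your router's routing table is updated as well: without NAT, traffic from the Azure Stack VMs leaves with its internal 192.168.x.x source address, and your router needs a route back to those ranges. So it’s always best to add the static routes to your router rather than to individual Windows clients.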

Next, we need to make sure that your Windows client also trusts the Azure Stack certificates. To do this, add the root certificate of the CA created during deployment to the Trusted Root Certification Authorities store on your client.

The easiest way to retrieve these certificates is on the Azure Stack host. Log in and open mmc.exe. Add the Certificates snap-in with CTRL+M (or via File > Add/Remove Snap-in), choose the Computer account (Local Computer), and browse to the Trusted Enterprise Root certificates.

From there, export the two certificates stored there and import them into the Trusted Root Certification Authorities store on your Windows client. If you do not import these certificates, you will not only receive a certificate warning when connecting to the portal, but portal elements will also fail to load correctly.

Finally, we need to configure DNS. Since Azure Stack contains a domain controller, your Windows client can use that DNS server for all name resolution. If you have a local DNS server, you can instead create conditional forwarders:

- DNS forwarding for azurestack.local to 192.168.200.224

- DNS forwarding for internal.azurestack.local to 192.168.200.224

- DNS forwarding for local.azurestack.external to 192.168.200.224

- DNS forwarding for local.cloudapp.azurestack.external to 192.168.200.224 (after installing WebApps)

Alternatively, point the DNS server setting on your client directly to 192.168.200.224.

Note that the local.azurestack.external and local.cloudapp.azurestack.external domains are actually hosted on 192.168.200.71, but 192.168.200.224 has a conditional forwarder for them.

(Note: replace 192.168.200.224 with the actual DNS server configured on your ASDK host. In later versions this is 192.168.200.69, but it can change; run ipconfig /all on your ASDK host to get the actual IP address.)
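Conditional forwarders are matched on the domain suffix of the name being resolved. The Python sketch below shows, for a few example names, which of the zones above would handle the query. It assumes the usual most-specific-zone-wins rule; matching_zone is a hypothetical helper, and the example host names other than the portal are hypothetical too. Names that match no zone are resolved by your normal DNS.

```python
# Conditional forwarder zones from the list above. Replace the address with the DNS
# server shown by "ipconfig /all" on your ASDK host (192.168.200.69 in later versions).
ASDK_DNS = "192.168.200.224"
FORWARDER_ZONES = {
    "azurestack.local": ASDK_DNS,
    "internal.azurestack.local": ASDK_DNS,
    "local.azurestack.external": ASDK_DNS,
    "local.cloudapp.azurestack.external": ASDK_DNS,  # after installing WebApps
}


def matching_zone(hostname, zones=FORWARDER_ZONES):
    """Return the most specific forwarder zone covering hostname, or None."""
    name = hostname.lower().rstrip(".")
    # Match whole labels only, so "notazurestack.local" does not match "azurestack.local".
    candidates = [zone for zone in zones if name == zone or name.endswith("." + zone)]
    # Assumption: the longest (most specific) matching zone wins.
    return max(candidates, key=len, default=None)


for host in (
    "portal.local.azurestack.external",
    "myapp.local.cloudapp.azurestack.external",  # hypothetical web app
    "azs-dc01.azurestack.local",                 # hypothetical host name
    "www.example.com",
):
    zone = matching_zone(host)
    target = FORWARDER_ZONES[zone] if zone else "your normal DNS"
    print(f"{host} -> {zone} -> {target}")
```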

And that’s it: you are now fully connected to Azure Stack in routing mode!