In the previous post, I detailed Server-Side-Encryption and the initial trust that someone must place in the cloud provider. I also demonstrated how confidential compute helps with that trust with regard to Managed HSM, and how blind trust in a “Hold-Your-Own-Key” approach might be misplaced.

Server-side encryption relies on the cloud services to perform encryption and unlocking on behalf of our applications, which requires trust in the software and firmware of those services. Client-Side-Encryption (CSE) is another popular method for securing data. CSE can work alongside server-side encryption to further strengthen data security, but the two can also be used independently. CSE removes the need to trust the storage/service controllers with hosting the unencrypted Data Encryption Key (DEK). In a client-side encryption architecture, the application itself is responsible for encrypting, decrypting and unlocking the data it uses. The application (client) can use a variety of encryption methods, including a symmetric DEK combined with an asymmetric Key Encryption Key (KEK), or simply an asymmetric key pair. These keys can be stored in an HSM or a Key Management Service (EKX or Managed HSM), or they can be self-generated and (optionally) kept inside the compute/container.

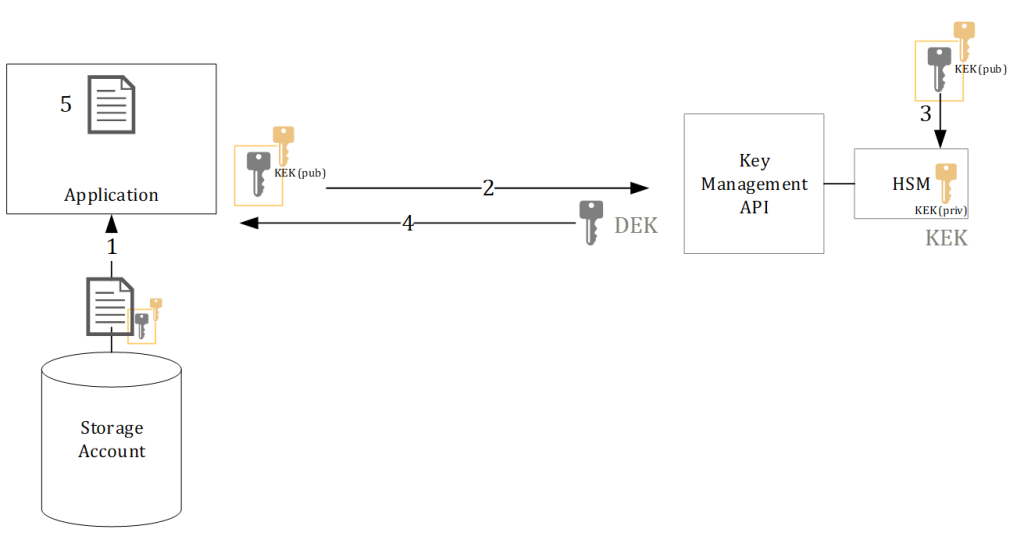

The architecture above shows the steps of client-side encryption:

- an application retrieves an encrypted file including the encrypted DEK metadata

- the application itself calls upon an API (or even the HSM directly) and sends the encrypted DEK

- the HSM unwraps the encrypted DEK and sends the unwrapped version back to the application

- the application can load the unwrapped (unencrypted) DEK in memory and decrypt the data as it is being used
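The four steps above can be sketched in a few lines of Python. This is a minimal sketch under loud assumptions: `FakeHsm`, `client_encrypt` and `client_decrypt` are hypothetical names, and the `toy_encrypt`/`toy_decrypt` helpers are a standard-library stand-in (SHA-256 keystream plus an HMAC tag) for a vetted cipher such as AES-GCM, used only so the example runs without extra packages. It is not meant for production use.

```python
import hashlib
import hmac
import secrets


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """TOY keystream (SHA-256 in counter mode); a stand-in for a real cipher."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def toy_encrypt(key: bytes, plaintext: bytes) -> bytes:
    """Return nonce + ciphertext + HMAC tag. Illustration only."""
    nonce = secrets.token_bytes(16)
    stream = _keystream(key, nonce, len(plaintext))
    body = bytes(a ^ b for a, b in zip(plaintext, stream))
    tag = hmac.new(key, nonce + body, hashlib.sha256).digest()
    return nonce + body + tag


def toy_decrypt(key: bytes, blob: bytes) -> bytes:
    """Check the tag first, then remove the keystream."""
    nonce, body, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(key, nonce + body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed: wrong key or tampered data")
    stream = _keystream(key, nonce, len(body))
    return bytes(a ^ b for a, b in zip(body, stream))


class FakeHsm:
    """Hypothetical stand-in for the HSM / key management API.

    The KEK is created inside this object and is never handed out.
    """

    def __init__(self) -> None:
        self._kek = secrets.token_bytes(32)

    def wrap(self, dek: bytes) -> bytes:
        return toy_encrypt(self._kek, dek)

    def unwrap(self, wrapped_dek: bytes) -> bytes:
        return toy_decrypt(self._kek, wrapped_dek)


def client_encrypt(hsm: FakeHsm, plaintext: bytes) -> dict:
    """Encrypt locally with a fresh DEK and store only the wrapped DEK."""
    dek = secrets.token_bytes(32)
    return {"ciphertext": toy_encrypt(dek, plaintext), "wrapped_dek": hsm.wrap(dek)}


def client_decrypt(hsm: FakeHsm, stored: dict) -> bytes:
    """Steps 1-4: read the file, have the DEK unwrapped, decrypt in memory."""
    dek = hsm.unwrap(stored["wrapped_dek"])  # DEK is now plaintext in app memory
    return toy_decrypt(dek, stored["ciphertext"])
```

Calling `client_decrypt(hsm, client_encrypt(hsm, b"report"))` with the same `FakeHsm` instance returns the original bytes, while a different `FakeHsm` (holding a different KEK) raises `ValueError`. Note that after `unwrap` the DEK sits unencrypted in the application's memory, which is the exposure discussed in the remainder of this post.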

While client-side encryption provides greater security for the encrypted data (as there is no longer a dependency on the cloud provider’s firmware and services to decrypt the data), there are also disadvantages and possible security problems. The first is that cloud providers do not offer client-side encryption options in many of their (PaaS/SaaS) services, which takes away much of the usability and scalability of the cloud.

The second problem, from a security perspective, is that the data and the DEK are loaded into the memory of an instance. CSE is most commonly implemented in IaaS services and containers; when these are not running in confidential compute, the actual DEK is still exposed in unencrypted form in active memory. A level of trust in the cloud provider (which provides the compute and thus the memory space) must therefore still exist, as the cloud provider could theoretically still take a memory dump and gain access to the DEK.

The third problem is that the HSM or Key Management Service still holds a key that can decrypt the data, or the key that unwraps the DEK that can decrypt the data. Ideally, decryption of the data would only be possible inside a trusted client. A compromise of the VM or container in a default CSE architecture running on standard compute could still lead to exposed data (for example through the administrator on the VM itself, a platform agent inside the VM, or even a hardware memory dump by the cloud provider).

Confidential Compute and CSE

The only protection against exposure of an unencrypted DEK, or even the data itself, in memory is confidential compute. Confidential computing technology encrypts data in memory and only processes it once the application environment is verified, preventing data access by cloud operators, malicious admins, and privileged software such as the (cloud) hypervisor.

There are multiple levels of confidential computing, each with additional security-strengthening options, but the foundations are the same for all of them: the active memory space of an entire VM or of an individual application is encrypted and not readable by the cloud provider. The root-of-trust keys in confidential compute (the root keys used to derive the memory space encryption keys) are provided by the CPU manufacturers.

AMD SEV-SNP and Intel TDX provide full memory encryption for VMs, effectively rendering the entire memory space of a VM inaccessible to outsiders, including the cloud operator.

Intel SGX allows an application-specific memory space to be encrypted. The protected part of the application (the enclave) is encrypted in memory and only available to the CPU itself. Even malicious admins, viruses or trojans would not gain access to the encrypted application memory space.

The encrypted memory space also allows customers to load their Data Encryption Keys (either symmetric or asymmetric) into their applications in an (externally) encrypted format. This means that even with the DEKs loaded into memory, external access to the (unencrypted) DEK is not possible.

The handling of the data encryption key can be done in many ways.

- Self Generated and CPU Sealed: In Managed HSM, the data encryption keys are self-generated by the instance and sealed with the CPU sealing key, ensuring that only that instance, running on that specific CPU, can read the encryption keys. Loss of the physical CPU, or changes in the underlying application code, result in a loss of the encryption keys. Hence the need for three Managed HSM instances that each hold a copy, and for the “security domain” under customer control for backup.

- Externally hosted: the application could use a standard identity to call an external HSM or key management service and have an encrypted DEK unwrapped. The unencrypted DEK can then be used to decrypt the data locally.

- Secure Key Release: using Secure Key Release, an application can request attestation from a third-party service to validate its environment (being confidential compute). It can then request an Azure Managed HSM to release the private part of a key under strict policy conditions: the key is only released when the attestation matches the release policies (and signatures) set on that key. The application can then securely retrieve the key and process data directly in the trusted enclave.
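To make the first option (self-generated and CPU-sealed keys) more tangible, the sketch below models a sealing key as a value derived from a CPU-bound secret and a measurement (hash) of the application code. This is a conceptual model only: `derive_sealing_key`, the label string and all byte values are assumptions for illustration, and real hardware derives sealing keys inside the CPU rather than in application code.

```python
import hashlib
import hmac


def derive_sealing_key(cpu_root_secret: bytes, code_measurement: bytes) -> bytes:
    """Model of a sealing key: bound to one CPU secret AND one code measurement.

    Hypothetical helper; the label is arbitrary and only separates this
    derivation from other uses of the same secret.
    """
    return hmac.new(
        cpu_root_secret, b"sealing-key-v1" + code_measurement, hashlib.sha256
    ).digest()


# Illustrative stand-ins for secrets that never leave the physical CPU.
cpu_a = b"\x01" * 32
cpu_b = b"\x02" * 32

# Illustrative stand-ins for the measurement of two application builds.
measurement_v1 = hashlib.sha256(b"application build 1").digest()
measurement_v2 = hashlib.sha256(b"application build 2").digest()

key = derive_sealing_key(cpu_a, measurement_v1)
same_again = derive_sealing_key(cpu_a, measurement_v1)
after_code_change = derive_sealing_key(cpu_a, measurement_v2)
on_other_cpu = derive_sealing_key(cpu_b, measurement_v1)
```

Here `same_again` equals `key`, while `after_code_change` and `on_other_cpu` do not. That is the property described above: a changed application or a lost CPU means the sealed keys can no longer be read, which is why copies and a customer-controlled backup are needed.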

While options 2 and 3 might look similar, and the release of a private key outside of the HSM might seem to go against the original security purpose of the HSM, option 3 is actually more secure. In the so-called envelope encryption of option 2, the unencrypted DEK that is returned to the application is protected only by the TLS encryption of the connection itself (usually cloud provider based). In Secure Key Release, the “secured private key” is sent wrapped in a key encryption key (KEK), similar to what is used in BYOK scenarios, which protects it beyond the TLS encryption. More importantly, there is also a validation of the original requester. There might be an identity-based validation (authentication/authorization) in option 2 as well, but the actual client health (has it been compromised?) is not attested in option 2.

In Secure Key Release (3), an attestation report – validating all the hardware and TEE/Confidential Compute environment parameters – and thus the security/integrity of the application – is required.
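The policy match described above can be sketched as a comparison between the claims in an attestation report and the conditions stored with the key. The policy shape below (`anyOf` authorities, each with `allOf` claim conditions) is loosely modelled on key release policies, but the function names, the authority URL and the claim names `tee.type` and `tee.debuggable` are illustrative assumptions. Signature verification of the attestation token is deliberately left out of this sketch.

```python
from typing import Any


def claim_value(claims: dict, dotted_name: str) -> Any:
    """Look up a nested claim such as 'tee.type'; return None when it is absent."""
    node: Any = claims
    for part in dotted_name.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def policy_allows_release(policy: dict, token_issuer: str, claims: dict) -> bool:
    """True when a trusted authority issued the token and all its conditions hold."""
    for rule in policy["anyOf"]:
        if rule["authority"] != token_issuer:
            continue  # token was not issued by this trusted attestation service
        if all(claim_value(claims, c["claim"]) == c["equals"] for c in rule["allOf"]):
            return True
    return False


# Hypothetical policy an administrator could attach to a key.
RELEASE_POLICY = {
    "anyOf": [
        {
            "authority": "https://attestation.example",
            "allOf": [
                {"claim": "tee.type", "equals": "sevsnpvm"},
                {"claim": "tee.debuggable", "equals": False},
            ],
        }
    ]
}

# Hypothetical claims as they might appear in two attestation reports.
confidential_vm = {"tee": {"type": "sevsnpvm", "debuggable": False}}
standard_vm = {"tee": {"type": "none", "debuggable": True}}
```

With these values, `policy_allows_release(RELEASE_POLICY, "https://attestation.example", confidential_vm)` evaluates to `True`, while the same call with `standard_vm`, or with a token from any other issuer, evaluates to `False`. An identity alone never satisfies the policy; the environment has to match as well.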

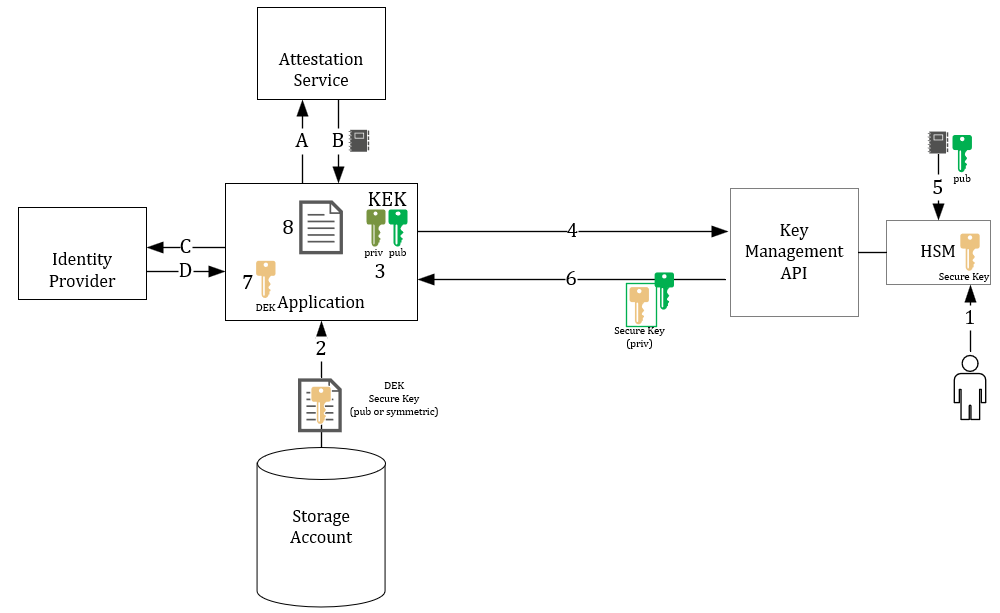

- A: As the application boots, it requests an attestation report

- B: The attestation service provides a signed report indicating the application is running in a trusted environment

- C: The application requests an authentication token for the Key Management API (managed HSM)

- D: After validation, the authentication token is released

- An administrator has (already) uploaded a specific key with a release policy to the (Managed) HSM

- Data is read from storage – it is encrypted with the Secure Key (public or symmetric)

- The application creates a Temporary Key Encryption Key (similar to BYOK)

- The authentication token, attestation token and Temporary KEK (public) are sent to the Key Management API/service

- The attestation report and KEK (public) are sent to the HSM, which evaluates the received attestation report against the key release policy. If they match, the Secure Key (private) is wrapped with the KEK (public)

- The Key Management API service returns the wrapped Secure Key (private) to the application

- The application can unwrap the wrapped Secure Key with the temporary KEK (private) – which never left the confidential enclave

- The application can now read encrypted data from a storage account using the Secure Key
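The role of the temporary KEK in the steps above can be sketched as follows. This is a toy model under loud assumptions: `ToyManagedHsm`, `application_fetch_key`, the token value and the single `tee` claim are hypothetical, and the key pair is unpadded "textbook" RSA with a deliberately small modulus, built from the standard library only so the example runs anywhere. A real implementation would use a vetted library and a padded scheme.

```python
import math
import secrets

_E = 65537  # common RSA public exponent


def _is_probable_prime(n: int, rounds: int = 20) -> bool:
    """Miller-Rabin primality test."""
    if n < 2:
        return False
    for small in (2, 3, 5, 7, 11, 13):
        if n % small == 0:
            return n == small
    d, r = n - 1, 0
    while d % 2 == 0:
        d, r = d // 2, r + 1
    for _ in range(rounds):
        x = pow(secrets.randbelow(n - 3) + 2, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(r - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False
    return True


def _random_prime(bits: int) -> int:
    while True:
        candidate = secrets.randbits(bits) | (1 << (bits - 1)) | 1
        if _is_probable_prime(candidate):
            return candidate


def new_temporary_kek(bits: int = 384):
    """Return ((n, e), (n, d)): a fresh TOY key pair, far too small for real use."""
    while True:
        p, q = _random_prime(bits // 2), _random_prime(bits // 2)
        phi = (p - 1) * (q - 1)
        if p != q and math.gcd(_E, phi) == 1:
            return (p * q, _E), (p * q, pow(_E, -1, phi))


class ToyManagedHsm:
    """Hypothetical HSM: releases the Secure Key only in wrapped form."""

    def __init__(self, secure_key: bytes, required_tee: str, valid_token: str):
        self._secure_key = secure_key
        self._required_tee = required_tee
        self._valid_token = valid_token

    def release(self, auth_token: str, attestation_claims: dict, kek_public) -> int:
        if auth_token != self._valid_token:
            raise PermissionError("caller is not authenticated")
        if attestation_claims.get("tee") != self._required_tee:
            raise PermissionError("attestation does not satisfy the release policy")
        n, e = kek_public
        key_as_int = int.from_bytes(self._secure_key, "big")
        if key_as_int >= n:
            raise ValueError("KEK modulus is too small for this key")
        return pow(key_as_int, e, n)  # wrap with the PUBLIC half of the KEK


def application_fetch_key(hsm, auth_token: str, attestation_claims: dict) -> bytes:
    """Runs inside the enclave: the private KEK is created and used only here."""
    kek_public, kek_private = new_temporary_kek()
    wrapped = hsm.release(auth_token, attestation_claims, kek_public)
    n, d = kek_private
    return pow(wrapped, d, n).to_bytes(32, "big")  # unwrap a 32-byte Secure Key
```

In this model only the public half of the KEK and the wrapped key ever cross the boundary between application and HSM. An application presenting claims that do not satisfy the policy gets a `PermissionError` instead of a key, even with a valid authentication token.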

Confidential Computing is still in its early days and is therefore not (yet) the default in cloud services. Customer applications need to be architected and written for it, and are (and should be) unique to each customer. But help is available for anyone architecting on this basis; see the links below.

Conclusion

Client-Side-Encryption can significantly increase the security and sovereignty of your data. However, one must bear in mind that simply demanding CSE capabilities renders most native cloud services useless, and that additional effort has to be put into the development of applications. Furthermore, to still secure the encrypted data and its keys, CSE should ideally be combined with Confidential Computing; otherwise the foundational trust in the cloud provider must remain. Depending on the trust level in your own organization, SEV-SNP/TDX VM encryption can be used or, when higher security regarding data encryption is required, Intel SGX with or without Secure Key Release must be used. And this is where other cloud vendors fall short: while they do offer the option of Client-Side-Encryption, there are still many ways in which the cloud vendor can interfere to get to the actual memory space of an instance.

References:

- Client-side encryption – Wikipedia

- AMD Secure Encrypted Virtualization (SEV) | AMD

- Intel® Trust Domain Extensions (Intel® TDX)

- Intel® Software Guard Extensions (Intel® SGX)

- Secure Key Release with Azure Key Vault and Azure Confidential Computing | Microsoft Learn

- Client-side encryption for blobs – Azure Storage | Microsoft Learn

- GitHub – microsoft/confidential-sidecar-containers: This is a collection of sidecar containers that can be incorporated within confidential container groups on Azure Container Instances.