When talking about sovereignty, the most common comment I hear is that local cloud providers can replace the big hyperscalers and that it’s super easy to move. In this post I want to go a bit deeper into why I think that’s a myth, on a hardware basis alone.

Most “localized” cloud providers run on commodity hardware: Dell, HP, Lenovo or white-box servers built on the standard x86/x64 architecture. While some already include ARM, these hardware platforms are designed for a mix of workloads using “standard (enterprise) hardware components”.

If we compare this to the hyperscalers, which design and implement their own hardware architectures, a few differences stand out. AWS uses its Nitro System, which is not just the hypervisor but the family name for several components that are equivalent in function to the Azure components I’m describing in this post.

Fun fact in between: the hardware modules Microsoft uses as its foundation of trust are built on open-source specifications, unlike their AWS Nitro counterparts.

Storage

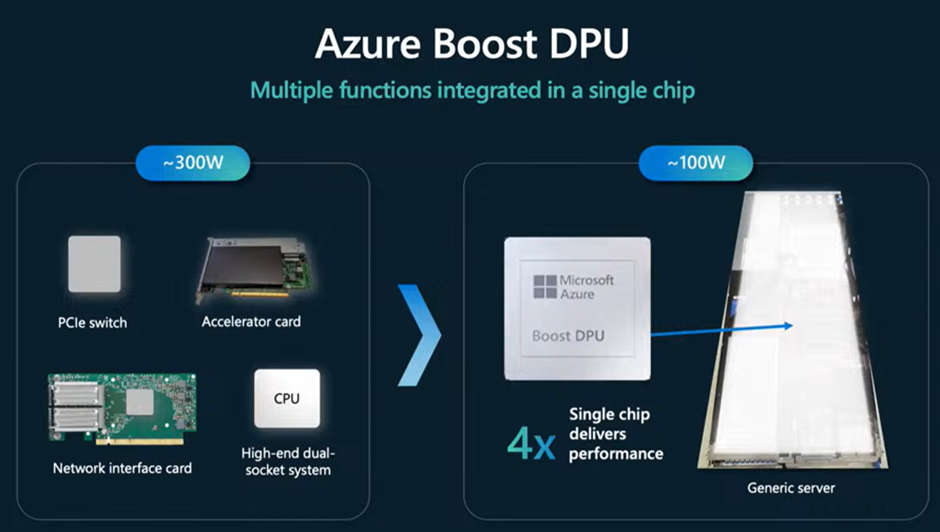

But I wanted to start with storage. Normal storage servers (yes, even SANs) use standard CPUs, PCIe lanes/switches and accelerator cards. Most vendors use Intel Xeons or custom ASICs/FPGAs, but their architecture doesn’t differ much from a regular compute node in terms of CPU, memory, I/O, lanes etc. The initial Microsoft storage pods used the same architecture, but Microsoft has just released its next architecture version: a DPU.

We had Central Processing Units (CPUs), Graphics Processing Units (GPUs) and Neural Processing Units (NPUs), and now also Data Processing Units (DPUs). This DPU removes the separate components from a storage server: the network card (+firmware), the PCIe switch (+firmware), the accelerator cards (+firmware) and the processor itself, because this single chip performs all of those tasks. It is optimized for storage, removes the additional attack surface of those other components, boosts performance by 4x and reduces the power required for these services by two thirds.
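As a back-of-the-envelope check, the two figures can be combined into a performance-per-watt ratio. This assumes both are measured against the same CPU-based storage server running the same workload, which the numbers above don’t spell out. With baseline throughput T and baseline power W, the DPU delivers 4T while drawing one third of W:

```latex
\frac{\text{perf/W}_{\text{DPU}}}{\text{perf/W}_{\text{baseline}}}
  = \frac{4T \,/\, \tfrac{1}{3}W}{T \,/\, W}
  = 4 \times 3
  = 12
```

Under that same-baseline assumption, that works out to roughly 12x the throughput per watt.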

Azure Servers

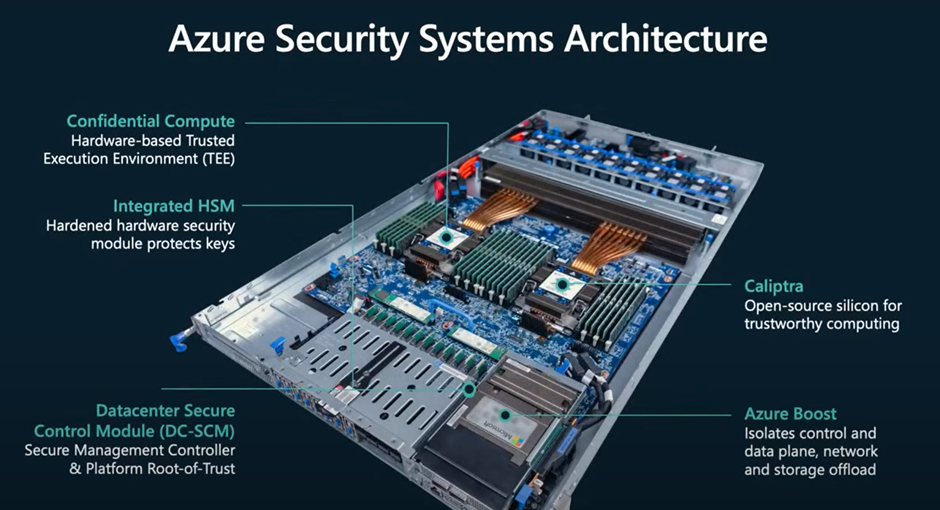

The latest Azure general-compute servers are based on AMD, Intel or ARM processors. The AMD and Intel processors support AMD SEV-SNP and Intel TDX, respectively, for confidential computing. Nothing special here, as you could literally buy the same processors yourself. But these servers also have an integrated HSM, a Datacenter Secure Control Module (DC-SCM), a Caliptra chip and an Azure Boost chip. Let’s take a look at why these are completely different from any hardware you can buy off the shelf and why they matter in a multi-tenant hyperscale cloud:

Integrated HSM Module

The integrated HSM can be used for workloads that require a super-fast HSM connection. It acts as a sort of cache that loads keys directly from Azure Key Vault Managed HSM, so applications can perform HSM-based operations locally without the added network latency. Confidential computing works hand-in-hand with the integrated HSM to ensure security and binding to the trusted enclave of an application or virtual machine (and no, this is not the TPM).
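To make the “cache” idea concrete, here is a minimal software sketch. It is not how the silicon works, only an illustration of the access pattern: a local key store fetches a key from a remote key service once, then serves every later operation locally. `RemoteKeyService` and `LocalKeyCache` are hypothetical names standing in for Managed HSM and the integrated HSM, and HMAC-SHA256 is an assumed stand-in for whatever operations the integrated HSM actually performs. A real HSM would never hand raw key bytes to application code; the sketch copies them only to stay short.

```python
import hashlib
import hmac
import os


class RemoteKeyService:
    """Hypothetical stand-in for a remote HSM service (e.g. a managed HSM).

    Every fetch models a network round trip; we count them to show the cache effect.
    """

    def __init__(self) -> None:
        self._keys: dict[str, bytes] = {}
        self.round_trips = 0

    def create_key(self, name: str) -> None:
        self._keys[name] = os.urandom(32)

    def fetch_key(self, name: str) -> bytes:
        self.round_trips += 1  # one simulated network round trip
        return self._keys[name]


class LocalKeyCache:
    """Hypothetical local 'integrated HSM': loads a key once, then operates locally.

    NOTE: a real HSM keeps key material inside the hardware boundary; holding raw
    bytes in a Python dict is a simplification for illustration only.
    """

    def __init__(self, remote: RemoteKeyService) -> None:
        self._remote = remote
        self._cache: dict[str, bytes] = {}

    def sign(self, key_name: str, message: bytes) -> bytes:
        key = self._cache.get(key_name)
        if key is None:
            # Cache miss: a single round trip to the remote service.
            key = self._remote.fetch_key(key_name)
            self._cache[key_name] = key
        # Cache hit path: the operation runs locally, with no network latency.
        return hmac.new(key, message, hashlib.sha256).digest()


remote = RemoteKeyService()
remote.create_key("app-signing-key")
local = LocalKeyCache(remote)
tags = [local.sign("app-signing-key", f"msg-{i}".encode()) for i in range(1000)]
print(remote.round_trips)  # 1
```

A thousand operations cost one remote round trip; everything after the first call stays on the box, which is the latency benefit the integrated HSM is after.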

LINK: Securing Azure infrastructure with silicon innovation | Microsoft Community Hub

AWS: no equivalent that I know of

Datacenter Secure Control Module

This module is actually not that new; it has been in almost every Azure server for quite some time. It connects the server to the rest of the Azure fleet. The beauty is that, unlike at other hyperscalers, it is not a proprietary chip but an open-source hardware module. It handles common server management, security and control functions by moving them off the typical processor motherboard onto a smaller module with a common form factor. This module holds the firmware (FW) state previously housed on the motherboard, and it also ensures that newer or “special” servers (x64 versus ARM, for example) can be treated the same way by the backbone fabric.
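The “treated the same way by the backbone fabric” point is essentially an interface abstraction. Below is a minimal sketch assuming a hypothetical `ManagementModule` interface; these are not DC-SCM or Redfish APIs, and the board classes and firmware version strings are made up. The fabric code only talks to the common interface, so an x64 board and an ARM board go through the same loop.

```python
from dataclasses import dataclass
from typing import Protocol


class ManagementModule(Protocol):
    """Hypothetical common interface a DC-SCM-style module exposes to the fabric."""

    def firmware_inventory(self) -> dict[str, str]: ...

    def apply_firmware(self, component: str, version: str) -> None: ...


@dataclass
class X64Board:
    bmc_version: str
    bios_version: str

    def firmware_inventory(self) -> dict[str, str]:
        return {"arch": "x64", "bmc": self.bmc_version, "bios": self.bios_version}

    def apply_firmware(self, component: str, version: str) -> None:
        if component == "bmc":
            self.bmc_version = version
        elif component == "bios":
            self.bios_version = version
        else:
            raise ValueError(f"unknown component: {component}")


@dataclass
class ArmBoard:
    bmc_version: str
    uefi_version: str

    def firmware_inventory(self) -> dict[str, str]:
        return {"arch": "arm64", "bmc": self.bmc_version, "uefi": self.uefi_version}

    def apply_firmware(self, component: str, version: str) -> None:
        if component == "bmc":
            self.bmc_version = version
        elif component == "uefi":
            self.uefi_version = version
        else:
            raise ValueError(f"unknown component: {component}")


def fabric_sweep(fleet: list[ManagementModule], component: str, version: str) -> int:
    """Bring one firmware component to `version` on every board that has it.

    The fabric never branches on CPU architecture; it only uses the common interface.
    Returns the number of boards that were updated.
    """
    updated = 0
    for module in fleet:
        inventory = module.firmware_inventory()
        if component in inventory and inventory[component] != version:
            module.apply_firmware(component, version)
            updated += 1
    return updated


fleet = [X64Board("1.4.2", "2.1.0"), ArmBoard("1.3.0", "3.0.1")]
print(fabric_sweep(fleet, "bmc", "1.4.2"))  # 1
```

Adding a new server type then means implementing the same two methods, not teaching the fabric about a new architecture.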

LINK: OCP DC-SCM Specification

AWS: Nitro Hardware module

Azure Boost

When you host a lot of VMs on a single physical computer, that computer becomes the bottleneck for some traffic flows. Under normal circumstances, for example, the network interface is managed by the host CPU and traffic flows through the host itself, which can cause both security and performance problems. Azure Boost takes care of this by offloading network and storage traffic from the host OS and pushing it directly to dedicated hardware components. With that traffic off the CPU, the host has more cycles available for the actual VMs, and you benefit from increased security.

LINK: Overview of Azure Boost | Microsoft Learn

AWS: Nitro Hardware offload module

Caliptra

All of these hardware accelerators are worth nothing without security. Hence the Caliptra chip: again open-source based, it is designed to handle the trust of the server. Caliptra is the next generation of the (also open-source) Cerberus chip and provides the following capabilities in a tamper-resistant, isolated environment, while supporting standards like Platform Firmware Resiliency (PFR) and Compute Express Link (CXL):

Firmware Measurement – Caliptra measures firmware components (like BIOS, bootloaders, etc.) as they load, hashing them to generate cryptographic measurements. These are compared against known good values to detect tampering.

Secure Boot and Attestation – It enables secure boot by ensuring that only authenticated and untampered firmware is executed. It also supports remote attestation, letting remote services verify a system’s firmware state using cryptographically signed measurements.

Key Management and Cryptography – Caliptra securely stores and manages cryptographic keys and performs operations like hashing, signing and encryption, all isolated from the main CPU.

In essence, Caliptra is a hardware watchdog for platform trust, ensuring that firmware and software layers start in a known, secure state.
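The measure, compare and attest steps described above can be sketched in a few lines. This is a minimal software model under stated assumptions, not Caliptra’s firmware or API: SHA-384 and a TPM-style “extend” register are chosen for illustration, the firmware images and golden values are made up, and HMAC with a shared key stands in for the asymmetric signature a real root of trust would use (Python’s standard library has no ECDSA).

```python
import hashlib
import hmac
import os

DIGEST_SIZE = 48  # SHA-384 output length in bytes


def measure(image: bytes) -> bytes:
    """Hash one firmware image as it loads (SHA-384 here, for illustration)."""
    return hashlib.sha384(image).digest()


def replay(measurements: list[bytes]) -> bytes:
    """Fold measurements into one register, TPM-PCR style: reg = H(reg || m).

    The register can only be extended, never set, so its final value commits
    to every stage and to the order in which they loaded.
    """
    register = bytes(DIGEST_SIZE)  # starts as all zeros
    for m in measurements:
        register = hashlib.sha384(register + m).digest()
    return register


def boot_chain(images: list[bytes]) -> tuple[bytes, list[bytes]]:
    """Measure each stage in load order; return the final register and the log."""
    log = [measure(image) for image in images]
    return replay(log), log


def verify_against_golden(log: list[bytes], golden: list[bytes]) -> bool:
    """Compare each measurement to its known-good value (constant-time compare)."""
    return len(log) == len(golden) and all(
        hmac.compare_digest(m, g) for m, g in zip(log, golden)
    )


# ASSUMPTION: stand-in for the device attestation key. Real attestation signs with an
# asymmetric key so the verifier only needs the public half; HMAC is used here only
# because the standard library has no ECDSA.
DEVICE_KEY = os.urandom(DIGEST_SIZE)


def sign_report(register: bytes, nonce: bytes) -> bytes:
    """Device side: bind the register value to the verifier's fresh nonce."""
    return hmac.new(DEVICE_KEY, register + nonce, hashlib.sha384).digest()


def remote_verify(register: bytes, nonce: bytes, signature: bytes, golden: list[bytes]) -> bool:
    """Verifier side: check the signature, then check the register matches the golden chain."""
    expected_sig = hmac.new(DEVICE_KEY, register + nonce, hashlib.sha384).digest()
    return hmac.compare_digest(signature, expected_sig) and hmac.compare_digest(
        register, replay(golden)
    )


firmware = [b"bios v2.1", b"bootloader v1.7", b"kernel v6.1"]  # made-up images
golden = [measure(image) for image in firmware]

register, log = boot_chain(firmware)
nonce = os.urandom(16)  # fresh per request, so an old report cannot be replayed
signature = sign_report(register, nonce)
print(verify_against_golden(log, golden), remote_verify(register, nonce, signature, golden))  # True True

tampered_register, tampered_log = boot_chain([b"bios v2.1", b"bootloader EVIL", b"kernel v6.1"])
print(verify_against_golden(tampered_log, golden))  # False
```

Changing a single stage changes its measurement and, through the extend chain, the final register, so both the local comparison and the remote check fail.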

LINK: CHIPS Alliance Welcomes the Caliptra Open Source Root of Trust Project | CHIPS Alliance

AWS: Nitro Security Module

Conclusion

Sovereignty and security go hand in hand. By ensuring that the hardware itself can be trusted and has no backdoors, you lay the groundwork for a secure cloud, which in turn is the foundation for data sovereignty in a public cloud. As I’ve described, hardware in the cloud is “not just a server”: it is specifically designed with custom components to ensure security and performance. Furthermore, each cluster is designed for a specific workload. GPU clusters are optimized for AI and other GPU-intensive workloads, and even within AI one cluster is tuned for natural language to computer code while another is tuned for computer to human language conversion; even AI clusters get special treatment for the function they need to perform.

In short, I’ve yet to come across a “regular” hoster (using OpenStack or equivalent) with the same deep integration of specialized hardware and the same focus on security and performance down to the firmware and chips themselves. While the open-source chips and modules are shared with the community, only a handful of specialized hardware vendors actually use them (for their proprietary products).

Adding hardware to a hyperscale cloud is also a bit different. Rather than “racking and stacking” one rack at a time, entire clusters of racks are added at once, sometimes up to twenty at a time, plug-and-play. No human intervention, no manual loading of software, no adding or removing drivers. It is all controlled by the Azure backbone fabric itself, but perhaps more on that later.

But I might be mistaken; if so, please let me know and I’m happy to update this post…