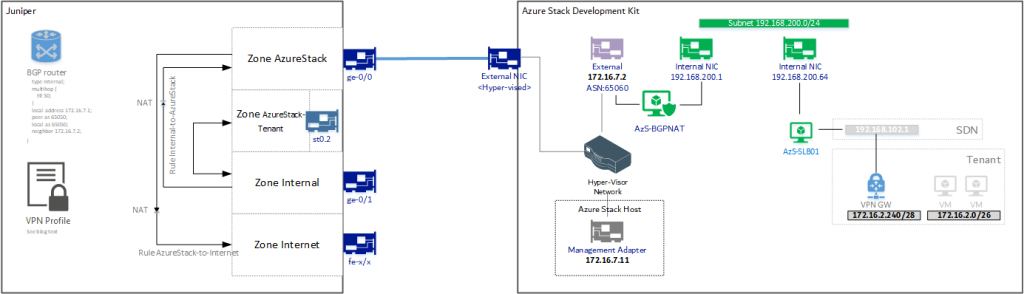

When you have the Azure Stack Development Kit deployed and running in Routing mode (see the earlier post), you can also create S2S VPN connections to the tenants deployed inside Azure Stack. In my configuration I use BGP on the BGPNAT VM to advertise the newly assigned “external” IP addresses to my Juniper, so I don’t have to add any specific routes.

This means the “external IP” address of a deployed Virtual Network Gateway can reach my Juniper, and vice versa, over Layer 3. The configuration in Azure Stack Dev Kit is really easy. Deploy a virtual network, assign a GatewaySubnet and deploy a Virtual Network Gateway. Then deploy the local gateway and add the local subnets to it, as well as the IP address to connect to (the Juniper). Lastly, create a connection object that specifies the two gateways, the type (Site-to-Site) and the pre-shared key.

The IKE and IPsec negotiation parameters cannot be configured. They are fixed and set to:

| IKE Phase 1 (Main Mode) Parameters | |
| --- | --- |
| IKE Version | IKEv2 |
| Diffie Hellman Group | Group 20 |
| Authentication Method | Pre-Shared Key (PSK) |
| Encryption & Hashing Algorithms | GCMAES256 |
| SA Lifetime | 28,800 seconds |

| IKE Phase 2 (Quick Mode) Parameters | |
| --- | --- |
| IKE Version | IKEv2 |
| Encryption & Hashing Algorithms | GCMAES256 |
| SA Lifetime (Time) | 14,400 seconds |
| SA Lifetime (Bytes) | 819,200 |
| Perfect Forward Secrecy (PFS) | PFS 2048 |
| Dead Peer Detection (DPD) | Supported |

We want to create a VPN tunnel between the Juniper (st0.2) and the VPN gateway (192.168.102.1), so now we can start the configuration.

First, the interfaces. I assigned my Azure Stack Dev Kit a dedicated interface (172.16.7.0/24). This means the Juniper needs some reconfiguration to allow internet access and to let my workstations reach the ASDK host. The host itself also needs some changes because I switched my networks (see the other post). If you have BGP enabled from the BGPNAT01 VM, make sure to change the BGP peer address as well.

Now that the stack is on a new network, we first need some basic rules to give the host internet access and to make sure we can reach the host from our internal networks (for administrative purposes).

    from-zone AzureStack to-zone Internet {
        policy AzureStack-to-Internet {
            match {
                source-address any;
                destination-address any;
                application any;
            }
            then {
                permit;
            }
        }
    }

Next is the policy that allows me access from my internal systems to the Azure Stack Dev Kit host:

    from-zone Internal to-zone AzureStack {
        policy Internal-to-Stack {
            match {
                source-address onprem-networks-1;
                destination-address any;
                application any;
            }
            then {
                permit;
            }
        }
    }

Finally, for this step, we need to enable NAT so that the Stack can reach the internet and can complete the tunnel later on.

The first rule-set enables NAT from AzureStack to the internet:

    source {
        rule-set nsw_srcnat {
            from zone [ AzureStack DMZ Internal WIFI ];
            to zone Internet;
            rule nsw-src-interface {
                match {
                    source-address 0.0.0.0/0;
                    destination-address 0.0.0.0/0;
                }
                then {
                    source-nat {
                        interface;
                    }
                }
            }
        }
        # The second rule-set enables NAT from my internal networks towards the Azure Stack Dev Kit host.
        rule-set AzureStack {
            from zone [ DMZ Internal WIFI ];
            to zone AzureStack;
            rule src_nat {
                match {
                    source-address 0.0.0.0/0;
                    destination-address 0.0.0.0/0;
                }
                then {
                    source-nat {
                        interface;
                    }
                }
            }
        }
    }
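
To check that both source NAT rule-sets are loaded and matching traffic, something like the following operational-mode commands can help. This is only a sketch: the commands are standard Junos SRX show commands, but what they print depends on your Junos release and on the traffic passing at that moment, so no output is shown here.

```
show security nat source summary
show security nat source rule all
```

The translation hit counters in the second command should increase for nsw-src-interface when the ASDK host reaches the internet, and for src_nat when an internal workstation connects to the host.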

Now that our Azure Stack Dev Kit has internet access and we can connect to it, we can install Azure Stack Dev Kit if that was not done before. Finalize the installation and create the plans, offers, subscriptions and the rest.

On the Juniper, I then created the tunnel interface as st0.2:

    st0 {
        …
        unit 2 {
            description AzureStackTunnel;
            family inet;
        }
    }

Next is the static route:

    routing-options {
        static {
            …..
            route 172.16.2.0/24 next-hop st0.2;
        }
    }

And then the configuration of the tunnel itself:

    security {
        ike {
            proposal stack {
                authentication-method pre-shared-keys;
                dh-group group20;
                authentication-algorithm sha-384;
                encryption-algorithm aes-256-cbc;
                lifetime-seconds 28800;
            }
            policy stack-policy {
                mode main;
                proposals stack;
                pre-shared-key ascii-text "$9$IUrEreM8X-bsW8Di"; ## SECRET-DATA
            }
            gateway stack {
                ike-policy stack-policy;
                address 192.168.102.1;
                external-interface ge-0/0/0;
                version v2-only;
            }
        }
        ipsec {
            proposal AzureStack {
                protocol esp;
                encryption-algorithm aes-256-gcm;
                lifetime-seconds 14400;
                lifetime-kilobytes 819200;
            }
            policy AzureStack {
                perfect-forward-secrecy {
                    keys group14;
                }
                proposals AzureStack;
            }
            vpn stack {
                bind-interface st0.2;
                ike {
                    gateway stack;
                    ipsec-policy AzureStack;
                }
            }
        }
    }
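
Once the configuration is committed and the connection object exists on the Azure Stack side, the state of the tunnel can be checked from operational mode. The commands below are a minimal sketch using standard Junos SRX show commands. The interface name and prefix are the ones used in this post, and the output is not shown because it varies per release and environment.

```
show security ike security-associations
show security ipsec security-associations
show interfaces st0.2 terse
show route 172.16.2.0/24
```

An IKE security association towards 192.168.102.1 and an IPsec security association for the stack VPN indicate that both phases negotiated successfully, and the route lookup should point at st0.2.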

Then you define the zones. There are two zones to keep in mind. The first is the “public” zone for the Azure Stack Dev Kit host and all L3-routable addresses inside that zone (172.16.7.0/24, 192.168.200.0/24, 192.168.10x.x/x). The second zone is the Virtual Network subnet that you defined (in my case 172.16.2.0/24).

I called the first one AzureStack. It is bound to the physical interface on the Juniper (ge-0/0/0.0):

    security-zone AzureStack {
        host-inbound-traffic {
            system-services {
                ike;
            }
        }
        interfaces {
            ge-0/0/0.0 {
                host-inbound-traffic {
                    system-services {
                        ping;
                        dhcp;
                        dns;
                        ike;
                    }
                }
            }
        }
    }

The second one is AzureStack-Tenant, which is bound to the tunnel interface created earlier (st0.2):

    security-zone AzureStack-Tenant {
        address-book {
            address Tenant-172-16-2-0-24 172.16.2.0/24;
        }
        host-inbound-traffic {
            system-services {
                ping;
            }
        }
        interfaces {
            st0.2;
        }
    }

Note that for the AzureStack zone (the first one) you need to allow IKE inbound traffic to establish the tunnel.

Finally, you need to allow traffic between the zones with security policies. Two policies allow traffic between the AzureStack-Tenant zone and the internal network, one for each direction:

    from-zone AzureStack-Tenant to-zone Internal {
        policy Tenant-to-Internal {
            match {
                source-address Tenant-172-16-2-0-24;
                destination-address onprem-networks-1;
                application any;
            }
            then {
                permit;
            }
        }
    }
    from-zone Internal to-zone AzureStack-Tenant {
        policy Internal-to-AzureStack-Tenant {
            match {
                source-address onprem-networks-1;
                destination-address Tenant-172-16-2-0-24;
                application any;
            }
            then {
                permit;
            }
        }
    }
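
To confirm that the two tenant policies are in place and that traffic through the tunnel actually matches them, the following operational-mode commands are one way to look. This is a sketch with standard Junos SRX show commands. The zone names and the prefix are the ones from this post, and no output is shown because it depends on the sessions active at that moment.

```
show security policies from-zone Internal to-zone AzureStack-Tenant
show security policies from-zone AzureStack-Tenant to-zone Internal
show security flow session destination-prefix 172.16.2.0/24
```

While a workstation is talking to a tenant VM, the flow session list should show sessions that reference the Internal-to-AzureStack-Tenant policy and leave through st0.2.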

There are two things to note in the configuration of the policies. First, the 172.16.7.0/24, 192.168.200.0/24 and 192.168.10x.x networks are never specified. This is because we treat these addresses as “external” for our Juniper. The BGP advertisement ensures the correct routing over my Juniper 172.16.7.1 interface.

Second, there is no AzureStack-to-Internal policy. This is because my Juniper treats these inbound connections as external. We also added a NAT translation for Internal-to-AzureStack, so a routed connection from AzureStack to the internal network makes no sense.

And there you have it: your Azure Stack Dev Kit is now fully connected to your corporate network, with the ability to create S2S VPNs from your subscriptions to your internal networks!